The department operates two GPU servers along with a server containing 40 CPU cores. These servers support projects and class assignments in neural networks, machine learning, and parallel computation.

Gaining Access

Access to the GPU servers is provided to students in specific classes and to those working on projects or capstones that require GPU support. If you are enrolled in a class that will use the GPU servers, access information and instructions will be provided by the instructor. If you are working on a project or capstone that will require GPU support, you should request access through your project advisor.

The Servers

The Department's three HPC servers are:

- volta.cs.uwlax.edu - four Volta-class V100 GPUs, SXM2 form factor, each with 16GB of HBM2 memory, two Intel Xeon Silver 4114 CPUs

- ampere.cs.uwlax.edu - one Ampere-class A100 GPU, PCIe Gen4 bus with 40GB of memory, two AMD EPYC 7352 CPUs

- cores.cs.uwlax.edu - 40 2.2 GHz Intel Xeon CPU cores

If you have been granted access, you can log in to the servers using ssh and your campus NetID credentials. Your home directory on ampere will be the same NFS-mounted home directory used by the iMacs in the CS Lab and on the other department servers such as compute.cs.uwlax.edu.

Since the home directory is mounted over NFS, performance may be adversely affected if your project frequently writes large amounts of data to the filesystem, such as log files or checkpoint data. If this is the case, you may be able to reduce the impact by lowering the frequency at which data is written. If you are using code obtained from an example, make sure you completely understand its behavior in this regard. If it is necessary to keep a large amount of such data, local storage on the server can be provided for your project to improve performance.
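One way to lower the write frequency is to buffer log lines in memory and append them to the file in batches. The following is only a minimal sketch of that idea using the Python standard library; the class name `ThrottledLog`, the `flush_every` parameter, the file name `train.log`, and the placeholder log values are all hypothetical and are not part of any department tooling.

```python
import os
import tempfile


class ThrottledLog:
    """Buffer log lines in memory and append them to a file in batches."""

    def __init__(self, path, flush_every=100):
        self.path = path
        self.flush_every = flush_every  # lines to collect before touching the file
        self._buffer = []
        self.writes = 0  # how many times the file was actually written

    def log(self, line):
        self._buffer.append(line)
        if len(self._buffer) >= self.flush_every:
            self.flush()

    def flush(self):
        if not self._buffer:
            return
        # One append per batch of lines instead of one per line.
        with open(self.path, "a") as f:
            f.write("\n".join(self._buffer) + "\n")
        self._buffer.clear()
        self.writes += 1


def demo():
    # A temporary directory stands in for a project directory (assumption).
    with tempfile.TemporaryDirectory() as tmp:
        log = ThrottledLog(os.path.join(tmp, "train.log"), flush_every=100)
        for step in range(1000):
            log.log(f"step={step} loss=0.0")  # placeholder values, not real results
        log.flush()  # write any remainder before exiting
        with open(log.path) as f:
            return log.writes, sum(1 for _ in f)
```

In this sketch, 1000 log lines result in 10 file writes instead of 1000. The trade-off is that lines still in the buffer are lost if the process is killed, so the batch size should reflect how much output you can afford to lose.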

Scp or rsync are good options for moving code/data between your network home directory and your personal computer.

TensorFlow

TensorFlow is a frequently used, Python-based system for developing and training neural network and machine learning models. There are a number of other systems built on top of TensorFlow. Many tutorials, such as https://www.tensorflow.org/tutorials, are available. The following code is taken from the TensorFlow Beginner Tutorial. It builds a model to recognize handwritten digits from the MNIST database.

    import tensorflow as tf

    # access the MNIST database of handwritten digits
    mnist = tf.keras.datasets.mnist
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0

    # define a neural network model consisting of several layers
    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(input_shape=(28, 28)),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10)
    ])

    # access the model predictions
    predictions = model(x_train[:1]).numpy()

    # define a loss function that measures prediction accuracy
    loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

    # compile the model for processing
    model.compile(optimizer='adam',
                  loss=loss_fn,
                  metrics=['accuracy'])

    # fit the model to the training dataset using 5 training epochs
    model.fit(x_train, y_train, epochs=5)

    # evaluate the model on a test set of data separate from the training set
    model.evaluate(x_test, y_test, verbose=2)

After connecting to one of the GPU servers using ssh and copying this code to a file named beginner.py, it can be executed with python3 beginner.py. This will produce output similar to the following.

    Epoch 1/5
    1875/1875 [==============================] - 3s 2ms/step - loss: 0.2881 - accuracy: 0.9156
    Epoch 2/5
    1875/1875 [==============================] - 3s 2ms/step - loss: 0.1410 - accuracy: 0.9578
    Epoch 3/5
    1875/1875 [==============================] - 3s 2ms/step - loss: 0.1038 - accuracy: 0.9687
    Epoch 4/5
    1875/1875 [==============================] - 3s 2ms/step - loss: 0.0885 - accuracy: 0.9728
    Epoch 5/5
    1875/1875 [==============================] - 3s 2ms/step - loss: 0.0753 - accuracy: 0.9758
    313/313 - 0s - loss: 0.0721 - accuracy: 0.9784

This shows that after the first round of training the network obtained an accuracy on the training data of 91.6%, which improved to 97.6% after the fifth round. When measured on the test set of data it obtained an accuracy of 97.8%.
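The step counts in the output (1875 per training epoch and 313 for evaluation) follow from the dataset size and the batch size. The short sketch below reproduces them, assuming the Keras default batch size of 32 and the standard MNIST split of 60,000 training and 10,000 test images; neither number is stated in the code above. The helper name `steps_per_epoch` is hypothetical.

```python
import math


def steps_per_epoch(num_samples, batch_size=32):
    """Number of batches needed to cover the dataset once (last batch may be partial)."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_per_epoch(60_000)  # MNIST training images (assumed split)
test_steps = steps_per_epoch(10_000)   # MNIST test images (assumed split)
print(train_steps, test_steps)  # prints: 1875 313
```

Passing a larger batch_size to model.fit reduces the number of steps per epoch accordingly.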

This model trains very quickly. Larger models on more complicated datasets may run for several days or longer. If you expect to have very long-running training sessions, you will need to put the process into the background and direct any important output to a file so that the ssh connection to the server can be terminated while the training process continues to run. There are several options for doing this. Please seek help through your advisor.
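As one illustration of the idea, the sketch below uses Python's standard subprocess module to start a command in its own session with its output sent to a log file. The function name `launch_detached` and the log file name are hypothetical, and this is not necessarily the approach your advisor will recommend; shell tools such as nohup, screen, or tmux are commonly used for the same purpose. Whether a detached process survives logout can also depend on how the server is configured.

```python
import subprocess


def launch_detached(cmd, log_path):
    """Start cmd in its own session, appending stdout and stderr to log_path."""
    log_file = open(log_path, "ab")
    try:
        return subprocess.Popen(
            cmd,
            stdin=subprocess.DEVNULL,    # do not keep the terminal's stdin open
            stdout=log_file,             # important output goes to the log file
            stderr=subprocess.STDOUT,    # errors go to the same file
            start_new_session=True,      # detach from the terminal's session (POSIX)
        )
    finally:
        # The child holds its own copy of the file descriptor.
        log_file.close()


# Example call (not run here; file names are placeholders):
# launch_detached(["python3", "beginner.py"], "beginner.log")
```

Progress can then be checked later by logging in again and reading the log file.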

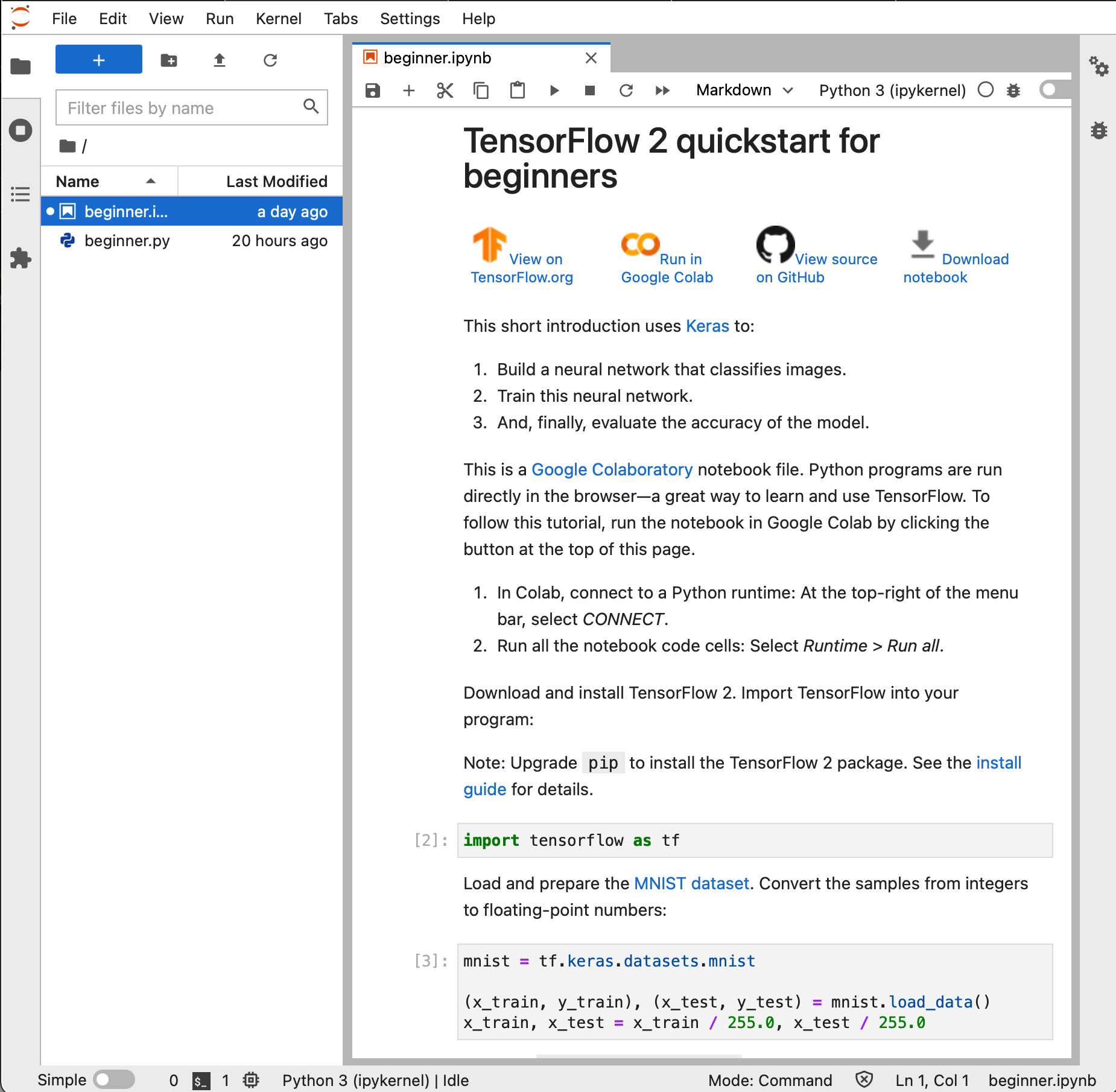

JupyterLab and Notebooks

An alternative to executing TensorFlow Python programs from the command line is JupyterLab. JupyterLab provides a web-based interface for working with "notebooks". A notebook consists of a sequence of cells, each of which contains a fragment of code (in Python or possibly other languages), output, graphs, or notes. Code cells can be run individually and interactively, or all of the code cells in a notebook can be run together.

JupyterLab is a web server running with your user credentials and with access to the files in your directory. It is also able to start "computation kernels" running on the server to execute the code cells in a notebook. To use JupyterLab, log in to a GPU machine using ssh. Change to the location where you keep project notebooks (a subdirectory of your home directory) with cd my-notebook-subdir, then execute jupyter-lab (the old notebook interface can be obtained with jupyter notebook). This will generate some log messages as it starts up. Towards the end of this output there will be a URL of the form

    http://ampere:8888/lab?token=0336db34279e0bd35a96e2fff8f507a25fbfb5d77c048957

which you can use from a browser on your local computer to connect to the JupyterLab server. Note that the URL contains only the hostname (e.g. ampere) instead of the full name (e.g. ampere.cs.uwlax.edu). Unless your local machine is configured to search the cs.uwlax.edu domain for hosts, you will need to edit the URL to include the full name. The port number may be different from 8888 if other people on the server are also running JupyterLab. The token is a secret string used to authenticate to the jupyter-lab server. Once connected you will see this interface

The left column shows two files in the notebook directory: beginner.py, the Python program from the previous section, and beginner.ipynb, the full notebook from the TensorFlow Beginner Tutorial. On the right an editor for the notebook is open. This notebook interface is very convenient for experimenting with code and keeping explanatory notes. It is less useful if processes will run for a long time and produce large amounts of output.

When done, save any open notebooks, stop the jupyter-lab process with Ctrl-C, and end the ssh session with Ctrl-D.
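The hostname edit described above for the JupyterLab URL can also be done programmatically. The following is a small sketch using Python's standard urllib.parse module; the function name `qualify_host` is hypothetical, and the token shown is a placeholder rather than a real one.

```python
from urllib.parse import urlsplit, urlunsplit


def qualify_host(url, domain="cs.uwlax.edu"):
    """Append the domain to a bare hostname in url, keeping port, path, and query."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    if not host or "." in host or host == "localhost":
        return url  # already qualified, or nothing sensible to change
    netloc = f"{host}.{domain}"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


# Placeholder token; use the one printed by your own jupyter-lab process.
print(qualify_host("http://ampere:8888/lab?token=YOUR_TOKEN"))
# prints: http://ampere.cs.uwlax.edu:8888/lab?token=YOUR_TOKEN
```

The port and token are passed through unchanged, so the same function works when JupyterLab picks a port other than 8888.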

Useful GPU Related Commands

nvidia-smi - "Nvidia System Management Interface", provides information on the GPU and its current load.

    $ nvidia-smi
    Thu Sep  2 14:04:46 2021
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 470.57.02    Driver Version: 470.57.02    CUDA Version: 11.4     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  NVIDIA A100-PCI...  On   | 00000000:81:00.0 Off |                    0 |
    | N/A   33C    P0    40W / 250W |  39326MiB / 40536MiB |     23%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |    0   N/A  N/A     86135      C   python3                         39323MiB |
    +-----------------------------------------------------------------------------+
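If you want the same figures in a script, for example to check whether a GPU is busy before starting a job, nvidia-smi can emit them as CSV through its --query-gpu and --format options. The sketch below is one possible way to read that output from Python. The function names and dictionary keys are hypothetical, and the parser assumes every field is a plain integer, which may not hold on every system.

```python
import subprocess

QUERY_FIELDS = "memory.used,memory.total,utilization.gpu"


def parse_gpu_query(text):
    """Parse CSV rows of 'used MiB, total MiB, utilization %' into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        # Assumes integer fields; a value such as "[N/A]" would raise ValueError.
        used, total, util = (int(field) for field in line.split(","))
        gpus.append({
            "memory_used_mib": used,
            "memory_total_mib": total,
            "memory_used_pct": round(100 * used / total, 1),
            "gpu_util_pct": util,
        })
    return gpus


def query_gpus():
    """Run nvidia-smi (only available on the GPU servers) and parse its CSV output."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_query(result.stdout)


# Values copied from the sample nvidia-smi output above.
sample = parse_gpu_query("39326, 40536, 23")
print(sample)
```

For the sample output, this shows that a single python3 process is using about 97% of the A100's memory, so another large job started at the same time would likely run out of GPU memory.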